DeepMind said both new Gemini models enable a variety of robots to perform a wider range of tasks than ever before.

The post Google DeepMind introduces two Gemini-based models to bring AI to the real world appeared first on The Robot Report.

Google’s robotics team applies expertise in machine learning, engineering, and physics simulation to address challenges facing the development of AI-powered robots. | Source: DeepMind

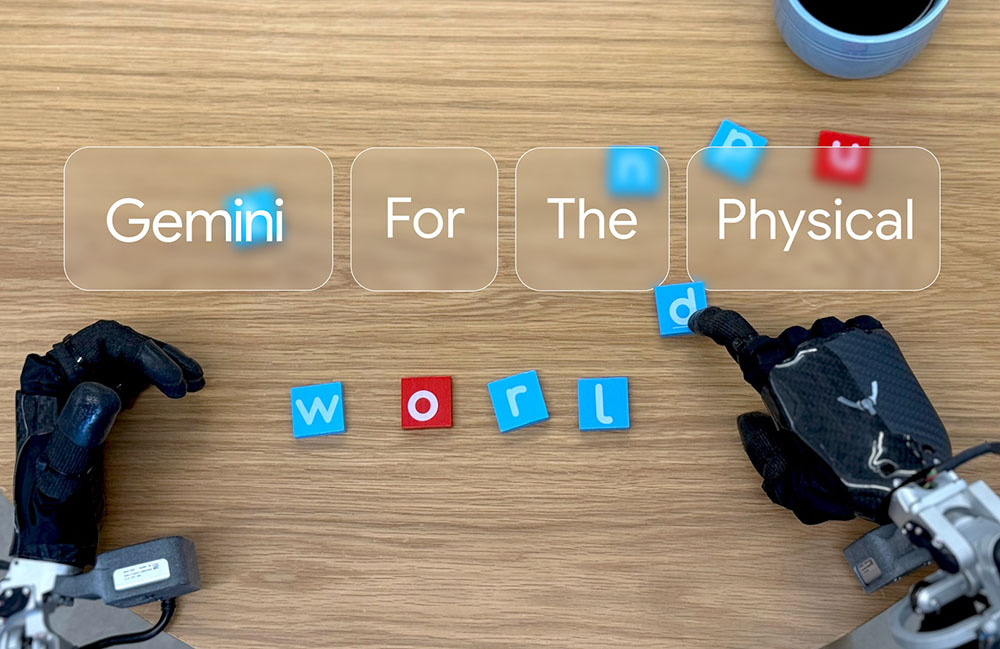

Google DeepMind today introduced two new artificial intelligence models: Gemini Robotics, its Gemini 2.0-based model designed for robotics, and Gemini Robotics-ER, a Gemini model with advanced spatial understanding.

DeepMind said it has been making progress in how Gemini solves complex problems through multimodal reasoning across text, images, audio, and video. With these new models, it is bringing those capabilities out of the digital realm and into the physical world.

Gemini Robotics is an advanced vision-language-action (VLA) model built on Gemini 2.0. It adds physical actions as a new output modality for directly controlling robots.

Gemini Robotics-ER offers advanced spatial understanding, enabling roboticists to run their own programs using Gemini’s embodied reasoning (ER) abilities.

DeepMind said both of these models enable a variety of robots to perform a wider range of real-world tasks than ever before. As part of its efforts, DeepMind is partnering with Apptronik to build humanoid robots with Gemini 2.0.

The Google unit is also working with trusted testers to guide the future of Gemini Robotics-ER. They include Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools.


How to make AI useful in the real world

According to a DeepMind blog post, to be useful and helpful to people, AI models for robotics need three principal qualities:

- They have to be general, meaning they’re able to adapt to different situations.

- They have to be interactive, so they can understand and respond quickly to instructions or changes in their environments.

- They have to be dexterous, meaning they can do the kinds of things people generally do with their hands and fingers, such as carefully manipulating objects.

While the organization’s previous work demonstrated some progress in these areas, Gemini Robotics represents a substantial step up in performance on all three axes.

DeepMind emphasizes generality and interactivity

Gemini Robotics uses Gemini’s world understanding to generalize to novel situations and solve a wide variety of tasks out of the box, including tasks it has never seen before in training. Gemini Robotics is also adept at dealing with new objects, diverse instructions, and new environments, asserted Google.

It said that on average, Gemini Robotics more than doubles performance on a comprehensive generalization benchmark compared with other VLA models.

In addition to generality, interactivity is key. To operate in our dynamic, physical world, robots must be able to seamlessly interact with people and their surrounding environment, and adapt to changes on the fly.

Because it’s built on a foundation of Gemini 2.0, DeepMind said Gemini Robotics is intuitively interactive. It taps into Gemini’s advanced language capabilities and can understand and respond to commands phrased in everyday, conversational language and in different languages.

The model can understand and respond to a much broader set of natural-language instructions than previous models, adapting its behavior to user input, said DeepMind. It also continuously monitors its surroundings, detects changes to its environment or instructions, and adjusts its actions accordingly. This kind of control, or “steerability,” can help people collaborate more effectively with robot assistants in a range of settings, from the home to the workplace, the company said.

Robots of all shapes and sizes require high dexterity

DeepMind said the third key pillar for building a helpful robot is acting with dexterity. Many everyday tasks that humans perform effortlessly require fine motor skills and are still too difficult for robots.

By contrast, Gemini Robotics can tackle extremely complex, multi-step tasks that require precise manipulation, such as origami folding or packing a snack into a Ziploc bag, it explained.

In addition, DeepMind said it designed Gemini Robotics to adapt to robots of different form factors. The company trained the model primarily on data from the bi-arm robotic platform ALOHA 2, but it also demonstrated that the model could control a bi-arm platform based on the Franka arms used in many academic labs.

DeepMind noted that Gemini Robotics can also be specialized for more complex embodiments, such as the humanoid Apollo robot developed by Apptronik, with the goal of completing real-world tasks.

Gemini Robotics-ER focuses on spatial reasoning

Gemini Robotics-ER enhances Gemini’s understanding of the world in ways necessary for robotics, focusing especially on spatial reasoning. It also allows roboticists to connect it with their existing low-level controllers. DeepMind said the model significantly improves Gemini 2.0’s existing abilities, such as pointing and 3D detection.

Combining spatial reasoning and Gemini’s coding abilities, Gemini Robotics-ER can instantiate entirely new capabilities on the fly, DeepMind claimed. For example, when shown a coffee mug, the model can intuit an appropriate two-finger grasp for picking it up by the handle and a safe trajectory for approaching it.

Gemini Robotics-ER can perform all the steps necessary to control a robot right out of the box, including perception, state estimation, spatial understanding, planning, and code generation, according to Google. In such an end-to-end setting, the model is two to three times more successful than Gemini 2.0.

Where code generation is not sufficient, Gemini Robotics-ER can tap into the power of in-context learning, following the patterns of a handful of human demonstrations to provide a solution.

DeepMind considers robot safety in Gemini approach

DeepMind said that as it explores the potential of AI and robotics, it’s taking a layered, holistic approach to addressing safety, from low-level motor control to high-level semantic understanding.

Gemini Robotics-ER can interface with “low-level” safety-critical controllers to do things like avoiding collisions, limiting the magnitude of contact forces, and ensuring the dynamic stability of mobile robots.

Building on Gemini’s core safety features, the organization enables Gemini Robotics-ER models to understand whether or not a potential action is safe to perform in a given context, and to generate appropriate responses.

DeepMind seeks to further research with new dataset

To advance robotics safety research across academia and industry, DeepMind also released a new dataset to evaluate and improve semantic safety in embodied AI and robotics. In previous work, it showed how a “Robot Constitution” inspired by Isaac Asimov’s Three Laws of Robotics could help prompt a large language model (LLM) to select safer tasks for robots.

The organization has since developed a framework to automatically generate data-driven constitutions – rules expressed directly in natural language – to steer a robot’s behavior. This framework would allow people to create, modify, and apply constitutions to develop robots that are safer and more aligned with human values.

Finally, the new ASIMOV dataset will help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios, said DeepMind.
